Most of the customers and managers I work with are interested in getting test automation implemented for their application or product, and sometimes for a portfolio of applications. The reasons they give for implementing automation and budgeting for it are that they believe, or in some cases are made to believe, one or more of the following.

- Automation is the way of saving testing costs

- Automation is the only way to improve the quality of testing

- Automation is the way to improve the testing process

- Automation is for Regression testing

- Once automation is implemented, it is possible to cut billing and reduce head count for the project

- Automation can work magic by testing the application and piling up bugs

- Automation can reduce the testing effort

So does this mean that all the statements above are TRUE in all cases, or that they are FALSE in all cases? There is no immediate answer to this question; it has to be decided based on the context. We all know, and most of the time the customer also understands (at least in my experience so far), that automation is an investment and that, unless it is done with care, it can lead to failure and loss of money. So the question is how to make the critical decision of whether or not to implement automation for an application. We as TESTERs are responsible for providing information to the stakeholders so that they can make an informed decision about it.

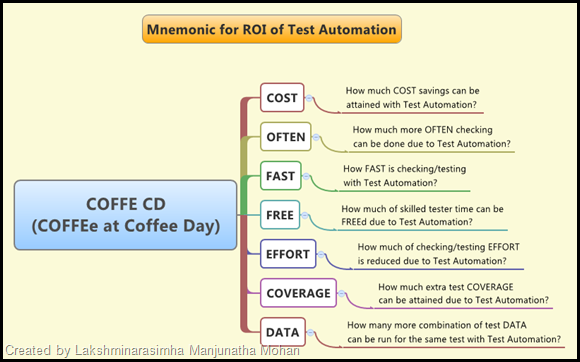

Management and customers are most interested in how much cost savings can be expected and achieved by implementing automation. However, cost savings are not always achieved. The value of automation should be presented in terms of the many other benefits we can gain from it. Doug Hoffman, Michael D Kelly and many others have already written quite a lot about ROI for Test Automation. I thank them for such wonderful information. In this blog post I try to explain how I have presented test automation benefits to customers using the heuristic COFFE CD (easily remembered as COFFEe at Coffee Day). (Earlier I used the mnemonic FCCOFED for the same.)
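To illustrate why cost savings are not guaranteed, here is a minimal sketch of a break-even calculation. It assumes a deliberately simple cost model (a one-time build cost plus per-run execution and maintenance costs) and uses the generic definition of ROI as net savings divided by the amount spent on automation. The function names, parameters and numbers are all hypothetical; this is not the COFFE CD heuristic, and a real analysis would need far more inputs.

```python
def automation_roi(manual_cost_per_run, automated_cost_per_run,
                   maintenance_cost_per_run, build_cost, runs):
    """Return ROI as a fraction: (manual cost - automation cost) / automation cost.

    All inputs are hypothetical figures in the same currency unit.
    """
    manual_total = manual_cost_per_run * runs
    automated_total = build_cost + (
        automated_cost_per_run + maintenance_cost_per_run
    ) * runs
    return (manual_total - automated_total) / automated_total


def break_even_runs(manual_cost_per_run, automated_cost_per_run,
                    maintenance_cost_per_run, build_cost):
    """Return the number of runs at which automation pays for itself.

    Returns None when an automated run is not cheaper than a manual run,
    because in that case the build cost is never recovered.
    """
    saving_per_run = manual_cost_per_run - (
        automated_cost_per_run + maintenance_cost_per_run
    )
    if saving_per_run <= 0:
        return None
    return build_cost / saving_per_run


if __name__ == "__main__":
    # Made-up example: a manual run costs 100, an automated run costs 10
    # to execute and 15 to maintain, and the suite costs 2000 to build.
    for runs in (10, 40):
        print(runs, automation_roi(100, 10, 15, 2000, runs))
    print(break_even_runs(100, 10, 15, 2000))
```

With these made-up numbers, the ROI is negative after ten runs and only turns positive after the suite has been run a little under thirty times, which is the kind of context the stakeholders need before deciding.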

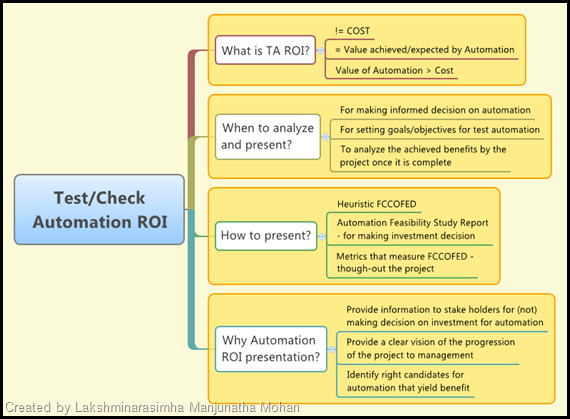

The mind map below answers some of the basic questions that one may have about Test Automation ROI.

Below is the heuristic/mnemonic that I use when analyzing automation ROI and when presenting it to the customer and the management. Thanks to Pradeep Soundarrajan for correcting the method by helping me with the “Coverage” (second C) in COFFE CD.

Let me know if this helps you in some way, and do share your experiences with presenting the ROI of automation.