Effort estimation has long been an art, and it will continue to be one. Estimating testing effort is especially hard because of the many uncertainties involved. It is harder still in an Agile/SCRUM environment, where change is the norm. Here is an estimation methodology that I have used with a good degree of success.

This methodology is especially suited to distributed agile teams in which one team develops and manually tests the application while another team handles test automation, although it can be used in other settings as well. In this scenario, the Product Owner of the automation team is a subject matter expert who is also a member of the development sprint team.

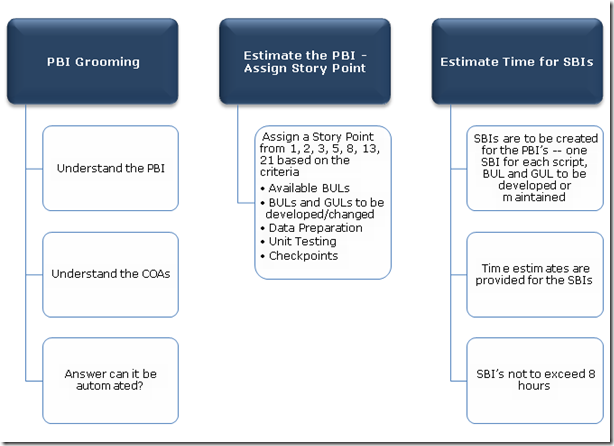

In SCRUM, estimation is usually done during sprint planning. For automation, however, we insist on estimating earlier, during the Product Backlog Item (PBI) grooming sessions held before the sprint planning meeting. The automation team develops its scripts independently of the development sprints and has a reasonably good understanding of the application, so it does not need the Product Owner (PO) present to understand or estimate the PBIs. If the PO's involvement is needed to understand a PBI, estimate in the grooming meeting or after it, but only once the PBI is understood.

Note that a story point estimate is not an effort estimate as such. Rather, it represents the size and complexity of a task relative to other tasks. Given the Sprint Velocity (rate of productivity), you can derive the effort in terms of time and cost.

For example, if you estimate 100 story points and your velocity is 4 story points per hour, your team needs about 25 hours (100 / 4 = 25) to complete them. Those hours can then be planned against the team's capacity (available time) for the sprint.
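The velocity arithmetic above can be sketched in a few lines of Python. This is a minimal illustration rather than part of the methodology itself: the function names `effort_hours` and `fits_in_capacity` and the 30-hour capacity are hypothetical, and velocity is expressed in story points per hour, as in the example.

```python
def effort_hours(story_points: float, velocity_points_per_hour: float) -> float:
    """Convert a story-point estimate into hours using the team's velocity."""
    if velocity_points_per_hour <= 0:
        raise ValueError("velocity must be positive")
    return story_points / velocity_points_per_hour


def fits_in_capacity(story_points: float, velocity_points_per_hour: float,
                     capacity_hours: float) -> bool:
    """Check whether the estimated effort fits in the team's sprint capacity."""
    return effort_hours(story_points, velocity_points_per_hour) <= capacity_hours


# The example from the text: 100 story points at 4 story points per hour.
print(effort_hours(100, 4))  # 25.0

# Hypothetical capacity of 30 available hours in the sprint.
print(fits_in_capacity(100, 4, capacity_hours=30))  # True
```

Cost follows the same way, by multiplying the hours by whatever hourly cost applies to the team.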

Story Point Estimation

- PBIs are estimated in story points, following Agile/SCRUM estimation principles

- PBIs are estimated during the grooming sessions

- PBIs are estimated by all team members, who agree on a number that is taken as the actual estimate

- Story points are taken from the Fibonacci series: 1, 2, 3, 5, 8, 13, 21

- A PBI with 1 story point is the least complex, while a PBI with 21 story points is the most complex relative to the others

- Story points reflect the size and complexity of a PBI relative to other PBIs

- When estimating the story points for a PBI, consider the following criteria:

    - Available (already developed) Business Utility Libraries (BULs) that can be reused: the more BULs available for reuse, the less complex the PBI

    - BULs to be developed: the number of BULs to be developed and their complexity

    - General Utility Libraries (GULs) to be developed or changed: the number of GULs to be developed or changed and their complexity

    - Data preparation: the amount and complexity of the test data to be prepared

    - Unit testing: the complexity of unit testing the script

    - Checkpoints: the number of checkpoints to be implemented based on the COAs, and their complexity

    - Reviews: the effort needed to review the automation script

- Follow these rules when estimating story points for automation:

    - All team members estimate each PBI individually

    - To keep team members from influencing one another, the team plays Planning Poker, an estimation game in which all team members estimate separately and reveal their estimates simultaneously when the Scrum Master (SM) calls for it

    - In most cases the highest estimate wins, but only if all team members and the SM agree

    - The SM is responsible for resolving discrepancies between team members' estimates, using further poker votes, reasoning, and discussion as the SM sees fit

- Create Sprint Backlog Items (SBIs) for each PBI: one SBI for each script, BUL, and GUL to be developed or maintained. In effect, this decomposes the PBI into multiple automation components, the SBIs.

- Provide time estimates for the SBIs based on the velocity

- Set the “Estimate” once and do not adjust it afterward

- No SBI should exceed 8 hours. The only exception is an SBI that cannot be broken down further without losing its logic; its estimate may exceed 8 hours.

- Project the current sprint's velocity from the previous sprint's velocity (rate of productivity) and the team's capacity
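The rules above can also be sketched in Python. This is a minimal, hypothetical illustration: all names (`propose_estimate`, `SBI`, `sbis_to_split`, `projected_sprint_points`), the team members, and the numbers are made up. Two details are assumptions rather than part of the rules as stated: any difference between votes is treated as a discrepancy for the SM to resolve, and the sprint projection simply multiplies the previous sprint's velocity (story points per hour) by the current capacity in hours.

```python
from dataclasses import dataclass

# Story-point scale from the text (Fibonacci values).
FIBONACCI_POINTS = (1, 2, 3, 5, 8, 13, 21)
MAX_SBI_HOURS = 8.0


def propose_estimate(votes: dict[str, int]) -> tuple[int, bool]:
    """Check Planning Poker votes that were revealed at the same time.

    Returns (highest vote, needs_discussion). The highest vote is only a
    proposal: it wins only if everyone agrees. Assumption: any difference
    between votes is a discrepancy for the SM to resolve by discussion
    and re-voting.
    """
    for member, points in votes.items():
        if points not in FIBONACCI_POINTS:
            raise ValueError(f"{member} voted {points}, which is not on the scale")
    needs_discussion = len(set(votes.values())) > 1
    return max(votes.values()), needs_discussion


@dataclass
class SBI:
    name: str
    story_points: int
    indivisible: bool = False  # True if splitting it further would lose its logic

    def hours(self, velocity_points_per_hour: float) -> float:
        # Time estimate for the SBI, derived from the velocity.
        return self.story_points / velocity_points_per_hour


def sbis_to_split(sbis: list[SBI], velocity_points_per_hour: float) -> list[str]:
    """Names of SBIs estimated above 8 hours that could still be broken down."""
    return [
        s.name
        for s in sbis
        if s.hours(velocity_points_per_hour) > MAX_SBI_HOURS and not s.indivisible
    ]


def projected_sprint_points(previous_velocity: float, capacity_hours: float) -> float:
    """Assumption: story points for this sprint = last sprint's rate x capacity."""
    return previous_velocity * capacity_hours


# Hypothetical names and numbers, for illustration only.
print(propose_estimate({"Asha": 5, "Ben": 8, "Chen": 5}))  # (8, True)

sbis = [
    SBI("login script", 13),
    SBI("payment BUL", 21),
    SBI("date GUL", 3),
    SBI("end-to-end checkout script", 21, indivisible=True),
]
print(sbis_to_split(sbis, velocity_points_per_hour=2.0))  # ['payment BUL']
print(projected_sprint_points(previous_velocity=2.0, capacity_hours=60))  # 120.0
```

The code only flags decisions for the team: agreeing on the final estimate and deciding how to split an SBI still happen in discussion, guided by the SM.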

-- LN

TESTER by INSTINCT, not by CHANCE.